Trying Out NVIDIA GPUs on EKS Auto Mode


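The other day I argued that EKS Auto Mode looks like a good environment for running GPU instances, and then wrote an article that used Neuron instances (Trainium/Inferentia). Neuron instances are not GPUs, though, so in hindsight that article ended up being a bit odd.

That said, EKS Auto Mode handles Neuron instances and NVIDIA GPUs in the same way, so the following benefits apply to both:

- No EKS node management is required
- You can use EC2 instances that come with the drivers preinstalled
- The EBS CSI driver is set up from the start, which makes block volumes easy to use
- The NodePool mechanism makes it easy to mix multiple kinds of instances (CPU/GPU, On-Demand/Spot, and so on)

This time I went back and tried NVIDIA GPUs on EKS Auto Mode.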

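Creating the EKS cluster

Create an EKS cluster on v1.31 with Auto Mode enabled.

I have already written up the creation steps several times, so I will skip them here.

Creating it from the Management Console.

Creating it with eksctl.

Creating it with Terraform.

Creating the NodePool

First, create a NodePool.

GPU instances are not included in the built-in NodePools, so you need to create your own.

Apply the following manifest.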

apiVersion: karpenter.sh/v1
kind: NodePool
metadata:
  name: aiml
spec:
  template:
    spec:
      requirements:
        - key: karpenter.sh/capacity-type
          operator: In
          values: ["on-demand", "spot"]
        - key: eks.amazonaws.com/instance-family
          operator: In
          values: ["g6", "g5", "g4dn"]
        - key: eks.amazonaws.com/instance-size
          operator: In
          values: ["xlarge", "2xlarge"]
        - key: eks.amazonaws.com/instance-gpu-manufacturer
          operator: In
          values: ["nvidia"]
      nodeClassRef:
        group: eks.amazonaws.com
        kind: NodeClass
        name: default
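Every entry under requirements has to hold at the same time, so the family and size lists effectively multiply into a set of candidate instance types. The following is only a rough sketch of that idea, not how Karpenter is implemented; the function name is hypothetical, and it assumes every family/size combination exists as a real instance type.

```python
from itertools import product

# Values copied from the NodePool requirements in this article.
INSTANCE_FAMILIES = ["g6", "g5", "g4dn"]
INSTANCE_SIZES = ["xlarge", "2xlarge"]


def candidate_instance_types(families, sizes):
    """Return every family.size combination allowed by two `In` requirements.

    Hypothetical helper for illustration only; the real selection also
    depends on availability, price, and the remaining requirements.
    """
    return sorted(f"{family}.{size}" for family, size in product(families, sizes))


if __name__ == "__main__":
    for name in candidate_instance_types(INSTANCE_FAMILIES, INSTANCE_SIZES):
        print(name)
```

The six names this produces are the same ones that appear under the node.kubernetes.io/instance-type requirement in the NodeClaim output later in this article.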

The supported instances are listed in the official documentation. As of January 2025, the following machine-learning-oriented instance families are available:

p5, p4d, p3, p3dn, gr6, g6, g6e, g5g, g5, g4dn, inf2, inf1, trn1, trn1n

https://docs.aws.amazon.com/eks/latest/userguide/automode-learn-instances.html

This time, with cost in mind, I use G-family Spot Instances.

A g4dn.xlarge costs 0.71 USD/hour even On-Demand, so it is reasonably cheap to experiment with.
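As a rough feel for what that hourly rate means, here is a minimal sketch of the arithmetic. The only figure taken from this article is the 0.71 USD/hour On-Demand rate; the function name and the example durations are assumptions, and Spot pricing is not modeled.

```python
# On-Demand rate for g4dn.xlarge quoted in this article.
G4DN_XLARGE_USD_PER_HOUR = 0.71


def estimate_cost_usd(hourly_rate_usd: float, hours: float, instances: int = 1) -> float:
    """Estimate compute cost, ignoring storage, data transfer, and EKS fees."""
    return round(hourly_rate_usd * hours * instances, 2)


if __name__ == "__main__":
    # Hypothetical usage patterns, for illustration only.
    print("8-hour session:", estimate_cost_usd(G4DN_XLARGE_USD_PER_HOUR, 8))
    print("30 days, always on:", estimate_cost_usd(G4DN_XLARGE_USD_PER_HOUR, 24 * 30))
```

Leaving a node running around the clock adds up quickly, which is one reason to let the NodePool scale down when nothing needs the GPU.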

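If you have never used GPU instances before, you need to request increases for the following quotas:

- Running On-Demand G and VT instances
- All G and VT Spot Instance Requests

Note that if the quota increases are not in place, only the NodeClaim is created and no Node is ever created.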
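As far as I know, these EC2 quotas are counted in vCPUs rather than in number of instances, so it helps to work out how many vCPUs you plan to run. The sketch below is illustrative only: the helper names are hypothetical, the xlarge value matches the eks.amazonaws.com/instance-cpu=4 label shown for g4dn.xlarge later in this article, and the 2xlarge value is an assumption you should check against the instance type you actually use.

```python
# vCPUs per instance size (xlarge from the node label in this article,
# 2xlarge assumed for illustration).
VCPUS_BY_SIZE = {"xlarge": 4, "2xlarge": 8}


def required_vcpus(instance_counts: dict) -> int:
    """Sum the vCPUs needed for a plan such as {"xlarge": 2, "2xlarge": 1}."""
    return sum(VCPUS_BY_SIZE[size] * count for size, count in instance_counts.items())


def fits_quota(instance_counts: dict, quota_vcpus: int) -> bool:
    """Return True when the planned instances fit within the vCPU quota."""
    return required_vcpus(instance_counts) <= quota_vcpus


if __name__ == "__main__":
    plan = {"xlarge": 2, "2xlarge": 1}
    print("vCPUs needed:", required_vcpus(plan))
    print("fits a quota of 8 vCPUs:", fits_quota(plan, 8))
```

With a quota of 0, even a single g4dn.xlarge does not fit, which matches the symptom of a NodeClaim that never gets a Node.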

Launching a GPU container

For the container image, I use one published in deep-learning-containers.

The NodePool already narrows down the instance requirements, so the nodeSelector only adds the Spot Instance condition.

apiVersion: v1
kind: Pod
metadata:
  labels:
    role: training
  name: training
spec:
  nodeSelector:
    karpenter.sh/capacity-type: spot
  containers:
    - command:
        - sh
        - -c
        - sleep infinity
      image: 763104351884.dkr.ecr.us-east-1.amazonaws.com/pytorch-training:2.5.1-gpu-py311-cu124-ubuntu22.04-ec2
      name: training
      resources:
        limits:
          nvidia.com/gpu: 1
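A nodeSelector only matches nodes that carry every listed label with exactly the listed value. The sketch below illustrates that rule with a hypothetical helper; it is not the scheduler's code, and the sample labels are a subset of the ones reported for the node in this article.

```python
def matches_node_selector(node_selector: dict, node_labels: dict) -> bool:
    """Return True when every selector key/value pair is present on the node."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


# Selector from the Pod manifest in this article.
POD_NODE_SELECTOR = {"karpenter.sh/capacity-type": "spot"}

# A few labels taken from the NodeClaim shown in this article.
SPOT_NODE_LABELS = {
    "karpenter.sh/capacity-type": "spot",
    "karpenter.sh/nodepool": "aiml",
    "node.kubernetes.io/instance-type": "g4dn.xlarge",
}

if __name__ == "__main__":
    print(matches_node_selector(POD_NODE_SELECTOR, SPOT_NODE_LABELS))
    # The same node launched On-Demand would not match.
    on_demand = dict(SPOT_NODE_LABELS, **{"karpenter.sh/capacity-type": "on-demand"})
    print(matches_node_selector(POD_NODE_SELECTOR, on_demand))
```

Because the NodePool allows both on-demand and spot, it is this one selector on the Pod that narrows the launch to Spot capacity.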

After about 8 minutes, the container became available.

% kubectl describe pod
Name:             training
Namespace:        default
Priority:         0
Service Account:  default
Node:             i-00f920dfadcdb4ccf/10.0.101.235
Start Time:       Fri, 03 Jan 2025 23:00:59 +0900
Labels:           role=training
Annotations:      <none>
Status:           Running
IP:               10.0.101.160
IPs:
  IP:  10.0.101.160
Containers:
  training:
    Container ID:  containerd://e35ee9aeaa6e2f80e2b06924aba742fa8f19f33e8605021e1988ac409d88a4ef
    Image:         763104351884.dkr.ecr.us-east-1.amazonaws.com/pytorch-training:2.5.1-gpu-py311-cu124-ubuntu22.04-ec2
    Image ID:      763104351884.dkr.ecr.us-east-1.amazonaws.com/pytorch-training@sha256:68ab3ffb92ac920daee05c3d3436f8a013de4358bf25983309bb93196e353798
    Port:          <none>
    Host Port:     <none>
    Command:
      sh
      -c
      sleep infinity
    State:          Running
      Started:      Fri, 03 Jan 2025 23:07:39 +0900
    Ready:          True
    Restart Count:  0
    Limits:
      nvidia.com/gpu:  1
    Requests:
      nvidia.com/gpu:  1
    Environment:       <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-4mtmx (ro)
Conditions:
  Type                        Status
  PodReadyToStartContainers   True
  Initialized                 True
  Ready                       True
  ContainersReady             True
  PodScheduled                True
Volumes:
  kube-api-access-4mtmx:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   BestEffort
Node-Selectors:              karpenter.sh/capacity-type=spot
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
                             nvidia.com/gpu:NoSchedule op=Exists
Events:
  Type     Reason            Age                 From               Message
  ----     ------            ----                ----               -------
  Normal   Nominated         8m35s               karpenter          Pod should schedule on: nodeclaim/aiml-x9829
  Warning  FailedScheduling  8m (x7 over 8m36s)  default-scheduler  no nodes available to schedule pods
  Warning  FailedScheduling  7m50s               default-scheduler  0/1 nodes are available: 1 Insufficient nvidia.com/gpu. preemption: 0/1 nodes are available: 1 No preemption victims found for incoming pod.
  Normal   Scheduled         7m40s               default-scheduler  Successfully assigned default/training to i-00f920dfadcdb4ccf
  Normal   Pulling           7m39s               kubelet            Pulling image "763104351884.dkr.ecr.us-east-1.amazonaws.com/pytorch-training:2.5.1-gpu-py311-cu124-ubuntu22.04-ec2"
  Normal   Pulled            61s                 kubelet            Successfully pulled image "763104351884.dkr.ecr.us-east-1.amazonaws.com/pytorch-training:2.5.1-gpu-py311-cu124-ubuntu22.04-ec2" in 6m37.578s (6m37.578s including waiting). Image size: 9979617914 bytes.
  Normal   Created           61s                 kubelet            Created container training
  Normal   Started           60s                 kubelet            Started container training

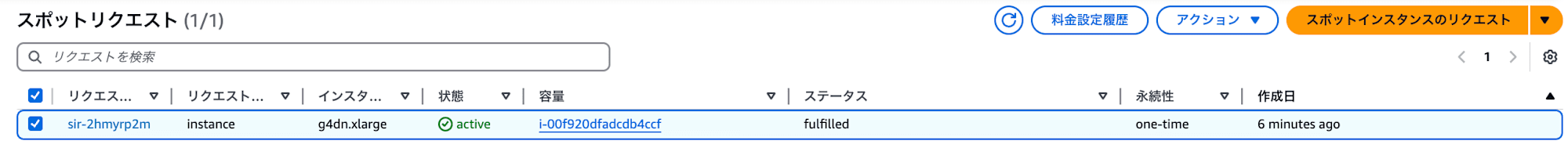
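The events show where the roughly eight minutes went: the node was ready for scheduling within about a minute, and the rest was mostly the image pull. The sketch below redoes that arithmetic from the figures in the events (durations, image size); the helper is hypothetical and only understands the h/m/s units that appear in this output.

```python
import re

_UNIT_SECONDS = {"h": 3600.0, "m": 60.0, "s": 1.0}


def parse_go_duration(text: str) -> float:
    """Convert a duration such as '6m37.578s' or '8m35s' into seconds."""
    parts = re.findall(r"(\d+(?:\.\d+)?)([hms])", text)
    if not parts:
        raise ValueError(f"unrecognized duration: {text!r}")
    return sum(float(value) * _UNIT_SECONDS[unit] for value, unit in parts)


# Figures copied from the Events section of the describe output.
IMAGE_BYTES = 9979617914
PULL_SECONDS = parse_go_duration("6m37.578s")
# Event ages are relative to when describe was run, so subtract the
# age of "Started" from the age of "Nominated".
NOMINATED_TO_START = parse_go_duration("8m35s") - parse_go_duration("60s")

throughput_mb_s = IMAGE_BYTES / PULL_SECONDS / 1e6
pull_share = PULL_SECONDS / NOMINATED_TO_START

if __name__ == "__main__":
    print(f"pull throughput: {throughput_mb_s:.1f} MB/s")
    print(f"share of nomination-to-start time spent pulling: {pull_share:.0%}")
```

The image is close to 10 GB, so the pull works out to roughly 25 MB/s and accounts for the large majority of the wait. A smaller image or a registry closer to the cluster would likely be the first thing to try if startup time matters.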

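This time a g4dn.xlarge Spot Instance was selected and launched.

Simply asking for a GPU in the manifest is enough to have one provisioned, which makes this extremely easy.

Let's also check the detailed attributes of the launched instance.

I did not specify g4dn.xlarge directly, but it appears to have been chosen with cost in mind.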

% kubectl describe nodeclaim aiml-x9829
Name:         aiml-x9829
Namespace:
Labels:       app.kubernetes.io/managed-by=eks
              eks.amazonaws.com/compute-type=auto
              eks.amazonaws.com/instance-category=g
              eks.amazonaws.com/instance-cpu=4
              eks.amazonaws.com/instance-cpu-manufacturer=intel
              eks.amazonaws.com/instance-cpu-sustained-clock-speed-mhz=2500
              eks.amazonaws.com/instance-ebs-bandwidth=3500
              eks.amazonaws.com/instance-encryption-in-transit-supported=true
              eks.amazonaws.com/instance-family=g4dn
              eks.amazonaws.com/instance-generation=4
              eks.amazonaws.com/instance-gpu-count=1
              eks.amazonaws.com/instance-gpu-manufacturer=nvidia
              eks.amazonaws.com/instance-gpu-memory=16384
              eks.amazonaws.com/instance-gpu-name=t4
              eks.amazonaws.com/instance-hypervisor=nitro
              eks.amazonaws.com/instance-local-nvme=125
              eks.amazonaws.com/instance-memory=16384
              eks.amazonaws.com/instance-network-bandwidth=5000
              eks.amazonaws.com/instance-size=xlarge
              eks.amazonaws.com/nodeclass=default
              karpenter.sh/capacity-type=spot
              karpenter.sh/nodepool=aiml
              kubernetes.io/arch=amd64
              kubernetes.io/os=linux
              node.kubernetes.io/instance-type=g4dn.xlarge
              topology.k8s.aws/zone-id=apne1-az1
              topology.kubernetes.io/region=ap-northeast-1
              topology.kubernetes.io/zone=ap-northeast-1c
Annotations:  eks.amazonaws.com/nodeclass-hash: 12140320694897820171
              eks.amazonaws.com/nodeclass-hash-version: v1
              karpenter.sh/nodepool-hash: 4012513481623584108
              karpenter.sh/nodepool-hash-version: v3
API Version:  karpenter.sh/v1
Kind:         NodeClaim
Metadata:
  Creation Timestamp:  2025-01-03T14:00:04Z
  Finalizers:
    karpenter.sh/termination
  Generate Name:  aiml-
  Generation:     1
  Owner References:
    API Version:           karpenter.sh/v1
    Block Owner Deletion:  true
    Kind:                  NodePool
    Name:                  aiml
    UID:                   8a9a371f-d6e8-455b-a011-c0fa15f378d3
  Resource Version:        76412
  UID:                     8cb6f95d-3283-4d94-a4c2-4b34c8b09324
Spec:
  Expire After:  336h
  Node Class Ref:
    Group:  eks.amazonaws.com
    Kind:   NodeClass
    Name:   default
  Requirements:
    Key:       node.kubernetes.io/instance-type
    Operator:  In
    Values:
      g4dn.2xlarge
      g4dn.xlarge
      g5.2xlarge
      g5.xlarge
      g6.2xlarge
      g6.xlarge
    Key:       karpenter.sh/capacity-type
    Operator:  In
    Values:
      spot
    Key:       eks.amazonaws.com/instance-family
    Operator:  In
    Values:
      g4dn
      g5
      g6
    Key:       eks.amazonaws.com/nodeclass
    Operator:  In
    Values:
      default
    Key:       karpenter.sh/nodepool
    Operator:  In
    Values:
      aiml
    Key:       eks.amazonaws.com/instance-size
    Operator:  In
    Values:
      2xlarge
      xlarge
    Key:       eks.amazonaws.com/instance-gpu-manufacturer
    Operator:  In
    Values:
      nvidia
  Resources:
    Requests:
      nvidia.com/gpu:  1
      Pods:            1
  Termination Grace Period:  24h0m0s
Status:
  Allocatable:
    Cpu:                  3470m
    Ephemeral - Storage:  111426258176
    Memory:               14066Mi
    nvidia.com/gpu:       1
    Pods:                 27
  Capacity:
    Cpu:                  4
    Ephemeral - Storage:  125G
    Memory:               15155Mi
    nvidia.com/gpu:       1
    Pods:                 27
  Conditions:
    Last Transition Time:  2025-01-03T14:00:04Z
    Message:               object is awaiting reconciliation
    Observed Generation:   1
    Reason:                AwaitingReconciliation
    Status:                Unknown
    Type:                  Initialized
    Last Transition Time:  2025-01-03T14:00:04Z
    Message:               Node not registered with cluster
    Observed Generation:   1
    Reason:                NodeNotFound
    Status:                Unknown
    Type:                  Registered
    Last Transition Time:  2025-01-03T14:00:06Z
    Message:
    Observed Generation:   1
    Reason:                Launched
    Status:                True
    Type:                  Launched
    Last Transition Time:  2025-01-03T14:00:04Z
    Message:               Initialized=Unknown, Registered=Unknown
    Observed Generation:   1
    Reason:                ReconcilingDependents
    Status:                Unknown
    Type:                  Ready
  Image ID:     ami-029163605de7a2489
  Provider ID:  aws:///ap-northeast-1c/i-00f920dfadcdb4ccf
Events:
  Type    Reason             Age   From       Message
  ----    ------             ----  ----       -------
  Normal  Launched           16s   karpenter  Status condition transitioned, Type: Launched, Status: Unknown -> True, Reason: Launched
  Normal  DisruptionBlocked  15s   karpenter  Cannot disrupt NodeClaim: nodeclaim does not have an associated node
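This output was captured about 16 seconds after launch, so only the Launched condition is True while Registered, Initialized, and Ready are still Unknown. To check where a NodeClaim is stuck, one option is to read the same conditions from `kubectl get nodeclaim aiml-x9829 -o json` and list the ones that are not yet True. The following is a minimal sketch with a hypothetical helper; the sample data mirrors the conditions above and is not fetched from a cluster.

```python
def unready_conditions(conditions: list) -> dict:
    """Return {type: reason} for every condition whose status is not 'True'."""
    return {
        cond["type"]: cond.get("reason", "")
        for cond in conditions
        if cond.get("status") != "True"
    }


# Conditions transcribed from the describe output in this article,
# using the usual Kubernetes field names (type/status/reason).
SAMPLE_CONDITIONS = [
    {"type": "Initialized", "status": "Unknown", "reason": "AwaitingReconciliation"},
    {"type": "Registered", "status": "Unknown", "reason": "NodeNotFound"},
    {"type": "Launched", "status": "True", "reason": "Launched"},
    {"type": "Ready", "status": "Unknown", "reason": "ReconcilingDependents"},
]

if __name__ == "__main__":
    for cond_type, reason in unready_conditions(SAMPLE_CONDITIONS).items():
        print(f"{cond_type}: {reason}")
```

If a NodeClaim never gets past this point, the quotas mentioned earlier are one of the first things worth checking.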

Using the provisioned GPU

This time I run a sample script published in the PyTorch repository.

It trains an image classification model.

import argparse
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torchvision import datasets, transforms
from torch.optim.lr_scheduler import StepLR


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 32, 3, 1)
        self.conv2 = nn.Conv2d(32, 64, 3, 1)
        self.dropout1 = nn.Dropout(0.25)
        self.dropout2 = nn.Dropout(0.5)
        self.fc1 = nn.Linear(9216, 128)
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = F.relu(x)
        x = self.conv2(x)
        x = F.relu(x)
        x = F.max_pool2d(x, 2)
        x = self.dropout1(x)
        x = torch.flatten(x, 1)
        x = self.fc1(x)
        x = F.relu(x)
        x = self.dropout2(x)
        x = self.fc2(x)
        output = F.log_softmax(x, dim=1)
        return output


def train(args, model, device, train_loader, optimizer, epoch):
    model.train()
    for batch_idx, (data, target) in enumerate(train_loader):
        data, target = data.to(device), target.to(device)
        optimizer.zero_grad()
        output = model(data)
        loss = F.nll_loss(output, target)
        loss.backward()
        optimizer.step()
        if batch_idx % args.log_interval == 0:
            print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                epoch, batch_idx * len(data), len(train_loader.dataset),
                100. * batch_idx / len(train_loader), loss.item()))
            if args.dry_run:
                break


def test(model, device, test_loader):
    model.eval()
    test_loss = 0
    correct = 0
    with torch.no_grad():
        for data, target in test_loader:
            data, target = data.to(device), target.to(device)
            output = model(data)
            test_loss += F.nll_loss(output, target, reduction='sum').item()  # sum up batch loss
            pred = output.argmax(dim=1, keepdim=True)  # get the index of the max log-probability
            correct += pred.eq(target.view_as(pred)).sum().item()

    test_loss /= len(test_loader.dataset)

    print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
        test_loss, correct, len(test_loader.dataset),
        100. * correct / len(test_loader.dataset)))


def main():
    # Training settings
    parser = argparse.ArgumentParser(description='PyTorch MNIST Example')
    parser.add_argument('--batch-size', type=int, default=64, metavar='N',
                        help='input batch size for training (default: 64)')
    parser.add_argument('--test-batch-size', type=int, default=1000, metavar='N',
                        help='input batch size for testing (default: 1000)')
    parser.add_argument('--epochs', type=int, default=14, metavar='N',
                        help='number of epochs to train (default: 14)')
    parser.add_argument('--lr', type=float, default=1.0, metavar='LR',
                        help='learning rate (default: 1.0)')
    parser.add_argument('--gamma', type=float, default=0.7, metavar='M',
                        help='Learning rate step gamma (default: 0.7)')
    parser.add_argument('--no-cuda', action='store_true', default=False,
                        help='disables CUDA training')
    parser.add_argument('--no-mps', action='store_true', default=False,
                        help='disables macOS GPU training')
    parser.add_argument('--dry-run', action='store_true', default=False,
                        help='quickly check a single pass')
    parser.add_argument('--seed', type=int, default=1, metavar='S',
                        help='random seed (default: 1)')
    parser.add_argument('--log-interval', type=int, default=10, metavar='N',
                        help='how many batches to wait before logging training status')
    parser.add_argument('--save-model', action='store_true', default=False,
                        help='For Saving the current Model')
    args = parser.parse_args()
    use_cuda = not args.no_cuda and torch.cuda.is_available()
    use_mps = not args.no_mps and torch.backends.mps.is_available()

    torch.manual_seed(args.seed)

    # Prefer CUDA, then Apple MPS, then fall back to the CPU
    if use_cuda:
        device = torch.device("cuda")
    elif use_mps:
        device = torch.device("mps")
    else:
        device = torch.device("cpu")

    train_kwargs = {'batch_size': args.batch_size}
    test_kwargs = {'batch_size': args.test_batch_size}
    if use_cuda:
        cuda_kwargs = {'num_workers': 1,
                       'pin_memory': True,
                       'shuffle': True}
        train_kwargs.update(cuda_kwargs)
        test_kwargs.update(cuda_kwargs)

    transform=transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.1307,), (0.3081,))
        ])
    dataset1 = datasets.MNIST('../data', train=True, download=True,
                       transform=transform)
    dataset2 = datasets.MNIST('../data', train=False,
                       transform=transform)
    train_loader = torch.utils.data.DataLoader(dataset1,**train_kwargs)
    test_loader = torch.utils.data.DataLoader(dataset2, **test_kwargs)

    model = Net().to(device)
    optimizer = optim.Adadelta(model.parameters(), lr=args.lr)

    scheduler = StepLR(optimizer, step_size=1, gamma=args.gamma)
    for epoch in range(1, args.epochs + 1):
        train(args, model, device, train_loader, optimizer, epoch)
        test(model, device, test_loader)
        scheduler.step()

    if args.save_model:
        torch.save(model.state_dict(), "mnist_cnn.pt")


if __name__ == '__main__':
    main()

First, get a shell in the container with kubectl exec -it training -- /bin/bash and confirm that the GPU is available.

root@training:/# python

Python 3.11.10 | packaged by conda-forge | (main, Oct 16 2024, 01:27:36) [GCC 13.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import torch

>>> print(torch.cuda.is_available())

True

The GPU is available, as expected.
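If you want a little more detail than True/False, a slightly longer check can also report the device name and the CUDA version PyTorch was built with. This is just a sketch, not part of the original verification: the function name is hypothetical, and the import is guarded so the snippet also runs (and reports no GPU) where PyTorch is not installed.

```python
def describe_gpus() -> dict:
    """Summarize what PyTorch can see, or report that PyTorch is missing."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False, "cuda_available": False, "devices": []}

    available = torch.cuda.is_available()
    devices = []
    if available:
        # One entry per visible GPU, e.g. the single GPU requested by the Pod.
        devices = [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]
    return {
        "torch_installed": True,
        "cuda_available": available,
        "cuda_version": torch.version.cuda,
        "devices": devices,
    }


if __name__ == "__main__":
    for key, value in describe_gpus().items():
        print(f"{key}: {value}")
```

On the g4dn.xlarge node from this article, the device list should contain a single entry for the T4, matching the eks.amazonaws.com/instance-gpu-name=t4 label.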

Next, download the sample scripts.

root@training:/# git clone https://github.com/pytorch/examples.git pytorch_examples

Cloning into 'pytorch_examples'...

remote: Enumerating objects: 4344, done.

remote: Counting objects: 100% (28/28), done.

remote: Compressing objects: 100% (24/24), done.

remote: Total 4344 (delta 12), reused 4 (delta 4), pack-reused 4316 (from 2)

Receiving objects: 100% (4344/4344), 41.38 MiB | 21.48 MiB/s, done.

Resolving deltas: 100% (2159/2159), done.

Run the training.

The dataset download hits 403 errors, but the files are then downloaded from an equivalent mirror, so I will not worry about it.

root@training:/# python -V

Python 3.11.10

root@training:/# python pytorch_examples/mnist/main.py

Downloading http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz

Failed to download (trying next):

HTTP Error 403: Forbidden

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-images-idx3-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-images-idx3-ubyte.gz to ../data/MNIST/raw/train-images-idx3-ubyte.gz

100%|████████████████████████████████████████████████████████████████████████████████| 9.91M/9.91M [00:02<00:00, 4.88MB/s]

Extracting ../data/MNIST/raw/train-images-idx3-ubyte.gz to ../data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz

Failed to download (trying next):

HTTP Error 403: Forbidden

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-labels-idx1-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-labels-idx1-ubyte.gz to ../data/MNIST/raw/train-labels-idx1-ubyte.gz

100%|█████████████████████████████████████████████████████████████████████████████████| 28.9k/28.9k [00:00<00:00, 194kB/s]

Extracting ../data/MNIST/raw/train-labels-idx1-ubyte.gz to ../data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz

Failed to download (trying next):

HTTP Error 403: Forbidden

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-images-idx3-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-images-idx3-ubyte.gz to ../data/MNIST/raw/t10k-images-idx3-ubyte.gz

100%|█████████████████████████████████████████████████████████████████████████████████| 1.65M/1.65M [00:02<00:00, 752kB/s]

Extracting ../data/MNIST/raw/t10k-images-idx3-ubyte.gz to ../data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz

Failed to download (trying next):

HTTP Error 403: Forbidden

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-labels-idx1-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-labels-idx1-ubyte.gz to ../data/MNIST/raw/t10k-labels-idx1-ubyte.gz

100%|████████████████████████████████████████████████████████████████████████████████| 4.54k/4.54k [00:00<00:00, 3.38MB/s]

Extracting ../data/MNIST/raw/t10k-labels-idx1-ubyte.gz to ../data/MNIST/raw

Train Epoch: 1 [0/60000 (0%)] Loss: 2.282550

Train Epoch: 1 [640/60000 (1%)] Loss: 1.384914

Train Epoch: 1 [1280/60000 (2%)] Loss: 0.967068

Train Epoch: 1 [1920/60000 (3%)] Loss: 0.587277

Train Epoch: 1 [2560/60000 (4%)] Loss: 0.349651

Train Epoch: 1 [3200/60000 (5%)] Loss: 0.484893

Train Epoch: 1 [3840/60000 (6%)] Loss: 0.249398

Train Epoch: 1 [4480/60000 (7%)] Loss: 0.643272

Train Epoch: 1 [5120/60000 (9%)] Loss: 0.228406

Train Epoch: 1 [5760/60000 (10%)] Loss: 0.298296

Train Epoch: 1 [6400/60000 (11%)] Loss: 0.290230

Train Epoch: 1 [7040/60000 (12%)] Loss: 0.203685

Train Epoch: 1 [7680/60000 (13%)] Loss: 0.322457

Train Epoch: 1 [8320/60000 (14%)] Loss: 0.161736

Train Epoch: 1 [8960/60000 (15%)] Loss: 0.272813

Train Epoch: 1 [9600/60000 (16%)] Loss: 0.218124

Train Epoch: 1 [10240/60000 (17%)] Loss: 0.278713

Train Epoch: 1 [10880/60000 (18%)] Loss: 0.247333

Train Epoch: 1 [11520/60000 (19%)] Loss: 0.242685

Train Epoch: 1 [12160/60000 (20%)] Loss: 0.121559

Train Epoch: 1 [12800/60000 (21%)] Loss: 0.265935

Train Epoch: 1 [13440/60000 (22%)] Loss: 0.091174

Train Epoch: 1 [14080/60000 (23%)] Loss: 0.143886

Train Epoch: 1 [14720/60000 (25%)] Loss: 0.154167

Train Epoch: 1 [15360/60000 (26%)] Loss: 0.353084

Train Epoch: 1 [16000/60000 (27%)] Loss: 0.339353

Train Epoch: 1 [16640/60000 (28%)] Loss: 0.078266

Train Epoch: 1 [17280/60000 (29%)] Loss: 0.143535

Train Epoch: 1 [17920/60000 (30%)] Loss: 0.123748

Train Epoch: 1 [18560/60000 (31%)] Loss: 0.198588

Train Epoch: 1 [19200/60000 (32%)] Loss: 0.157635

Train Epoch: 1 [19840/60000 (33%)] Loss: 0.099943

Train Epoch: 1 [20480/60000 (34%)] Loss: 0.218125

Train Epoch: 1 [21120/60000 (35%)] Loss: 0.185580

Train Epoch: 1 [21760/60000 (36%)] Loss: 0.373170

Train Epoch: 1 [22400/60000 (37%)] Loss: 0.343005

Train Epoch: 1 [23040/60000 (38%)] Loss: 0.153235

Train Epoch: 1 [23680/60000 (39%)] Loss: 0.153309

Train Epoch: 1 [24320/60000 (41%)] Loss: 0.046802

Train Epoch: 1 [24960/60000 (42%)] Loss: 0.096538

Train Epoch: 1 [25600/60000 (43%)] Loss: 0.100720

Train Epoch: 1 [26240/60000 (44%)] Loss: 0.249396

Train Epoch: 1 [26880/60000 (45%)] Loss: 0.250653

Train Epoch: 1 [27520/60000 (46%)] Loss: 0.171525

Train Epoch: 1 [28160/60000 (47%)] Loss: 0.165695

Train Epoch: 1 [28800/60000 (48%)] Loss: 0.023156

Train Epoch: 1 [29440/60000 (49%)] Loss: 0.128141

Train Epoch: 1 [30080/60000 (50%)] Loss: 0.186060

Train Epoch: 1 [30720/60000 (51%)] Loss: 0.245850

Train Epoch: 1 [31360/60000 (52%)] Loss: 0.179059

Train Epoch: 1 [32000/60000 (53%)] Loss: 0.156703

Train Epoch: 1 [32640/60000 (54%)] Loss: 0.099746

Train Epoch: 1 [33280/60000 (55%)] Loss: 0.132232

Train Epoch: 1 [33920/60000 (57%)] Loss: 0.112141

Train Epoch: 1 [34560/60000 (58%)] Loss: 0.163182

Train Epoch: 1 [35200/60000 (59%)] Loss: 0.071246

Train Epoch: 1 [35840/60000 (60%)] Loss: 0.139502

Train Epoch: 1 [36480/60000 (61%)] Loss: 0.214075

Train Epoch: 1 [37120/60000 (62%)] Loss: 0.207161

Train Epoch: 1 [37760/60000 (63%)] Loss: 0.035032

Train Epoch: 1 [38400/60000 (64%)] Loss: 0.042787

Train Epoch: 1 [39040/60000 (65%)] Loss: 0.058622

Train Epoch: 1 [39680/60000 (66%)] Loss: 0.410047

Train Epoch: 1 [40320/60000 (67%)] Loss: 0.060234

Train Epoch: 1 [40960/60000 (68%)] Loss: 0.097452

Train Epoch: 1 [41600/60000 (69%)] Loss: 0.117821

Train Epoch: 1 [42240/60000 (70%)] Loss: 0.085116

Train Epoch: 1 [42880/60000 (71%)] Loss: 0.056729

Train Epoch: 1 [43520/60000 (72%)] Loss: 0.045884

Train Epoch: 1 [44160/60000 (74%)] Loss: 0.049479

Train Epoch: 1 [44800/60000 (75%)] Loss: 0.058368

Train Epoch: 1 [45440/60000 (76%)] Loss: 0.219911

Train Epoch: 1 [46080/60000 (77%)] Loss: 0.028278

Train Epoch: 1 [46720/60000 (78%)] Loss: 0.039849

Train Epoch: 1 [47360/60000 (79%)] Loss: 0.113346

Train Epoch: 1 [48000/60000 (80%)] Loss: 0.105185

Train Epoch: 1 [48640/60000 (81%)] Loss: 0.317538

Train Epoch: 1 [49280/60000 (82%)] Loss: 0.027123

Train Epoch: 1 [49920/60000 (83%)] Loss: 0.099996

Train Epoch: 1 [50560/60000 (84%)] Loss: 0.099928

Train Epoch: 1 [51200/60000 (85%)] Loss: 0.157199

Train Epoch: 1 [51840/60000 (86%)] Loss: 0.152913

Train Epoch: 1 [52480/60000 (87%)] Loss: 0.177107

Train Epoch: 1 [53120/60000 (88%)] Loss: 0.033308

Train Epoch: 1 [53760/60000 (90%)] Loss: 0.085157

Train Epoch: 1 [54400/60000 (91%)] Loss: 0.059736

Train Epoch: 1 [55040/60000 (92%)] Loss: 0.196982

Train Epoch: 1 [55680/60000 (93%)] Loss: 0.175163

Train Epoch: 1 [56320/60000 (94%)] Loss: 0.239577

Train Epoch: 1 [56960/60000 (95%)] Loss: 0.123234

Train Epoch: 1 [57600/60000 (96%)] Loss: 0.067367

Train Epoch: 1 [58240/60000 (97%)] Loss: 0.068065

Train Epoch: 1 [58880/60000 (98%)] Loss: 0.065272

Train Epoch: 1 [59520/60000 (99%)] Loss: 0.022473

Test set: Average loss: 0.0489, Accuracy: 9844/10000 (98%)

==================== Omitted because it is too long ====================

Train Epoch: 14 [0/60000 (0%)] Loss: 0.000667

Train Epoch: 14 [640/60000 (1%)] Loss: 0.097416

Train Epoch: 14 [1280/60000 (2%)] Loss: 0.002931

Train Epoch: 14 [1920/60000 (3%)] Loss: 0.018966

Train Epoch: 14 [2560/60000 (4%)] Loss: 0.002120

Train Epoch: 14 [3200/60000 (5%)] Loss: 0.094113

Train Epoch: 14 [3840/60000 (6%)] Loss: 0.010101

Train Epoch: 14 [4480/60000 (7%)] Loss: 0.001241

Train Epoch: 14 [5120/60000 (9%)] Loss: 0.048978

Train Epoch: 14 [5760/60000 (10%)] Loss: 0.112938

Train Epoch: 14 [6400/60000 (11%)] Loss: 0.009444

Train Epoch: 14 [7040/60000 (12%)] Loss: 0.015535

Train Epoch: 14 [7680/60000 (13%)] Loss: 0.001834

Train Epoch: 14 [8320/60000 (14%)] Loss: 0.008719

Train Epoch: 14 [8960/60000 (15%)] Loss: 0.003821

Train Epoch: 14 [9600/60000 (16%)] Loss: 0.013853

Train Epoch: 14 [10240/60000 (17%)] Loss: 0.002987

Train Epoch: 14 [10880/60000 (18%)] Loss: 0.000478

Train Epoch: 14 [11520/60000 (19%)] Loss: 0.000975

Train Epoch: 14 [12160/60000 (20%)] Loss: 0.076186

Train Epoch: 14 [12800/60000 (21%)] Loss: 0.054088

Train Epoch: 14 [13440/60000 (22%)] Loss: 0.095574

Train Epoch: 14 [14080/60000 (23%)] Loss: 0.019683

Train Epoch: 14 [14720/60000 (25%)] Loss: 0.014405

Train Epoch: 14 [15360/60000 (26%)] Loss: 0.001300

Train Epoch: 14 [16000/60000 (27%)] Loss: 0.002141

Train Epoch: 14 [16640/60000 (28%)] Loss: 0.026139

Train Epoch: 14 [17280/60000 (29%)] Loss: 0.000496

Train Epoch: 14 [17920/60000 (30%)] Loss: 0.003039

Train Epoch: 14 [18560/60000 (31%)] Loss: 0.001561

Train Epoch: 14 [19200/60000 (32%)] Loss: 0.000351

Train Epoch: 14 [19840/60000 (33%)] Loss: 0.060833

Train Epoch: 14 [20480/60000 (34%)] Loss: 0.002630

Train Epoch: 14 [21120/60000 (35%)] Loss: 0.010868

Train Epoch: 14 [21760/60000 (36%)] Loss: 0.007680

Train Epoch: 14 [22400/60000 (37%)] Loss: 0.003565

Train Epoch: 14 [23040/60000 (38%)] Loss: 0.175288

Train Epoch: 14 [23680/60000 (39%)] Loss: 0.015968

Train Epoch: 14 [24320/60000 (41%)] Loss: 0.036710

Train Epoch: 14 [24960/60000 (42%)] Loss: 0.021130

Train Epoch: 14 [25600/60000 (43%)] Loss: 0.036840

Train Epoch: 14 [26240/60000 (44%)] Loss: 0.021997

Train Epoch: 14 [26880/60000 (45%)] Loss: 0.002322

Train Epoch: 14 [27520/60000 (46%)] Loss: 0.015408

Train Epoch: 14 [28160/60000 (47%)] Loss: 0.013879

Train Epoch: 14 [28800/60000 (48%)] Loss: 0.002331

Train Epoch: 14 [29440/60000 (49%)] Loss: 0.004856

Train Epoch: 14 [30080/60000 (50%)] Loss: 0.025145

Train Epoch: 14 [30720/60000 (51%)] Loss: 0.004917

Train Epoch: 14 [31360/60000 (52%)] Loss: 0.001568

Train Epoch: 14 [32000/60000 (53%)] Loss: 0.005067

Train Epoch: 14 [32640/60000 (54%)] Loss: 0.059743

Train Epoch: 14 [33280/60000 (55%)] Loss: 0.013705

Train Epoch: 14 [33920/60000 (57%)] Loss: 0.026091

Train Epoch: 14 [34560/60000 (58%)] Loss: 0.020486

Train Epoch: 14 [35200/60000 (59%)] Loss: 0.011995

Train Epoch: 14 [35840/60000 (60%)] Loss: 0.005763

Train Epoch: 14 [36480/60000 (61%)] Loss: 0.049923

Train Epoch: 14 [37120/60000 (62%)] Loss: 0.021059

Train Epoch: 14 [37760/60000 (63%)] Loss: 0.009348

Train Epoch: 14 [38400/60000 (64%)] Loss: 0.024582

Train Epoch: 14 [39040/60000 (65%)] Loss: 0.002445

Train Epoch: 14 [39680/60000 (66%)] Loss: 0.037113

Train Epoch: 14 [40320/60000 (67%)] Loss: 0.018202

Train Epoch: 14 [40960/60000 (68%)] Loss: 0.010362

Train Epoch: 14 [41600/60000 (69%)] Loss: 0.006468

Train Epoch: 14 [42240/60000 (70%)] Loss: 0.008705

Train Epoch: 14 [42880/60000 (71%)] Loss: 0.002657

Train Epoch: 14 [43520/60000 (72%)] Loss: 0.036460

Train Epoch: 14 [44160/60000 (74%)] Loss: 0.026741

Train Epoch: 14 [44800/60000 (75%)] Loss: 0.000931

Train Epoch: 14 [45440/60000 (76%)] Loss: 0.001090

Train Epoch: 14 [46080/60000 (77%)] Loss: 0.014249

Train Epoch: 14 [46720/60000 (78%)] Loss: 0.099528

Train Epoch: 14 [47360/60000 (79%)] Loss: 0.024378

Train Epoch: 14 [48000/60000 (80%)] Loss: 0.036011

Train Epoch: 14 [48640/60000 (81%)] Loss: 0.001983

Train Epoch: 14 [49280/60000 (82%)] Loss: 0.010713

Train Epoch: 14 [49920/60000 (83%)] Loss: 0.007878

Train Epoch: 14 [50560/60000 (84%)] Loss: 0.008163

Train Epoch: 14 [51200/60000 (85%)] Loss: 0.014262

Train Epoch: 14 [51840/60000 (86%)] Loss: 0.001632

Train Epoch: 14 [52480/60000 (87%)] Loss: 0.011517

Train Epoch: 14 [53120/60000 (88%)] Loss: 0.003155

Train Epoch: 14 [53760/60000 (90%)] Loss: 0.001497

Train Epoch: 14 [54400/60000 (91%)] Loss: 0.028443

Train Epoch: 14 [55040/60000 (92%)] Loss: 0.030236

Train Epoch: 14 [55680/60000 (93%)] Loss: 0.003975

Train Epoch: 14 [56320/60000 (94%)] Loss: 0.008705

Train Epoch: 14 [56960/60000 (95%)] Loss: 0.065356

Train Epoch: 14 [57600/60000 (96%)] Loss: 0.005833

Train Epoch: 14 [58240/60000 (97%)] Loss: 0.007950

Train Epoch: 14 [58880/60000 (98%)] Loss: 0.001189

Train Epoch: 14 [59520/60000 (99%)] Loss: 0.002572

Test set: Average loss: 0.0278, Accuracy: 9921/10000 (99%)

Training finished successfully!
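The 403 errors in the download log are harmless because each file is retried from a second location. The sketch below shows that general try-the-next-mirror pattern. It is not torchvision's actual code: the function name and the injected fetch callable are hypothetical, and the two base URLs are simply the ones that appear in the log.

```python
from typing import Callable, Sequence, Tuple

# Base URLs as they appear in the download log of this article.
MIRRORS = [
    "http://yann.lecun.com/exdb/mnist/",
    "https://ossci-datasets.s3.amazonaws.com/mnist/",
]


def download_with_fallback(
    filename: str,
    mirrors: Sequence[str],
    fetch: Callable[[str], bytes],
) -> Tuple[str, bytes]:
    """Try each mirror in order and return (url, data) from the first that works."""
    errors = []
    for base in mirrors:
        url = base + filename
        try:
            return url, fetch(url)
        except OSError as exc:  # urllib's HTTPError/URLError derive from OSError
            errors.append(f"{url}: {exc}")
    raise RuntimeError("all mirrors failed:\n" + "\n".join(errors))


def fake_fetch(url: str) -> bytes:
    """Stand-in for a real downloader: the first mirror answers 403."""
    if url.startswith("http://yann.lecun.com/"):
        raise OSError("HTTP Error 403: Forbidden")
    return b"fake-bytes"


if __name__ == "__main__":
    url, data = download_with_fallback("train-images-idx3-ubyte.gz", MIRRORS, fake_fetch)
    print("downloaded from", url, "-", len(data), "bytes")
```

Passing the downloader in as a parameter keeps the sketch runnable without network access; in real use it could be a small wrapper around urllib.request.urlopen.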

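Summary

This time I tried using a GPU with EKS Auto Mode.

As with Neuron instances, it works without any special setup, which is nice.

I think EKS Auto Mode will be a strong option whenever you need to launch machine-learning-oriented instances, so please give it a try!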